We tend to look to science as a source of factual, trustworthy information, and we build much of our society on the knowledge the scientific process produces. Examples include the societal changes induced by findings related to the climate crisis or the COVID-19 pandemic. This trust stems from the perceived rigor of the scientific method and from the notion of quality control by well-established publishers, which filter the work of scientists for soundness and relevance. Over the years, however, the current system of scientific publishing has been increasingly criticized by scientists themselves. Here I will list the seven biggest problems I have come across in my very early career in science.

- Publication bias. Publication bias arises when the probability that a scientific study is published is not independent of its results. In practice this occurs when quality control in the form of journal or conference acceptance favors positive or novel results over negative or reproduced ones. For example, it is easier to publish the discovery of a new compound that acts against a certain disease than the finding that a compound has no effect on it. While publication bias is largely induced by the acceptance decisions of publication outlets, it also affects authors, who often do not even consider submitting negative results or putting significant effort into replication studies. This reluctance to communicate “uninteresting” results is sometimes referred to as the “file drawer problem”, and it can be assumed to be closely linked to the incentives created by publication bias.

- Misaligned incentives. “Publish or perish” is a pervasive aphorism describing the pressure in academia that emerges from the requirement to publish a certain number of articles in highly ranked journals or conferences. It is often claimed that this pressure creates misaligned incentives, under which less rigorous work or even data manipulation is encouraged in favor of a higher publication output. Such incentives are clearly at odds with the official mandate of science to rigorously check the factual accuracy of claims.

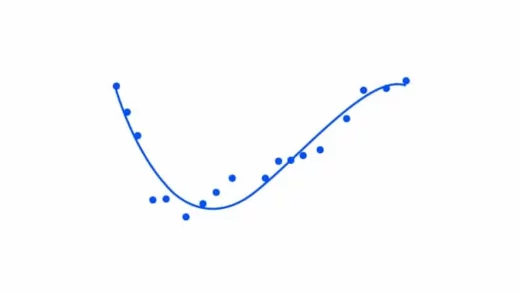

- Irreproducibility. Problems 1 and 2 are likely causes of the often lamented “replication crisis”. This term denotes the problem that many published scientific results cannot be replicated by other scientists in the same area. The same root problems have likely also led to a situation in which only a small number of replication studies are carried out, which is detrimental to the reliability of scientific knowledge. Higher replication rates would also make it easier to spot scientific misconduct, which is unfortunately prevalent in the system.

- Inconsistent peer-reviews. The concept of peer-review exists in a variety of forms to filter potential publications according to standards of quality, correctness and novelty. To reduce bias, peer-review is largely held in “single-blind” or “double-blind” mode, meaning that either only the identity of the reviewers is kept secret or both the authors and the reviewers stay anonymous. While peer-review is deemed necessary by most scientists, it is also seen as insufficient in many respects and is a source of frustration for many researchers. One of the biggest criticisms of the peer-review system is inconsistency, i.e. a reviewer's acceptance decision can be arbitrary and does not strictly depend on the quality of the submission. For example, one study found that eight out of twelve psychology papers were rejected for methodological flaws when resubmitted to the same journals in which they had already been published.

Furthermore, the anonymity of the reviewers clearly reduces accountability, which can lead to low-effort reviews, as there is no reputation at stake and effort is mostly incentivized by moral principles. At the same time, the anonymity of the reviewers (and of the authors in the double-blind case) is fragile, as it is entirely based on trust. The identity of the reviewers is known to the journal editors who pick them. This means that their identity is constantly in danger of being compromised by deliberate leaks or data breaches. On top of these problems, peer-review is also susceptible to malicious attacks through the creation of fake peer-reviewers. These types of fraud do not require sophisticated methods, considering that in some areas it is fairly common practice for editors to ask the authors themselves for reviewer suggestions.

- Delays in the publication process. The time it takes from submission of an article to its actual publication by a journal can be substantial. Björk and Solomon report a mean delay of 12 months from submission to publication across a wide range of disciplines and journals, with a standard deviation of 7 months. The reasons for this are manifold but mostly lie in the large number of people involved in the peer-review and editing process. A big problem is that all of this happens hidden from peers and the public. While such secrecy can inhibit the spread of misinformation in some cases, in others it can unnecessarily stall publication until the content becomes nearly irrelevant. Significant parts of the delay still stem from relics of the paper journal era, when articles had to be collected first before they could be published as a bundle. This reason for delay should have been abolished long ago, and it has been in some purely digital journals. It is also counteracted by authors publishing their work on pre-print platforms post acceptance.

- Lack of transparency. As already indicated in the description of problems 4 and 5, many of the processes involved in publication do not follow transparent rules and are kept obscure at least until publication. Peer-reviews are mostly kept under wraps even after publication, as are the initial reason for consideration, the selection of peer-reviewers and the rationale behind the publication decision. Acceptance decisions are taken by the publishers based on peer-review feedback. However, the link between review feedback and the decision is entirely based on trust. For example, there is nothing stopping publishers from systematically desk-rejecting submissions based on their personal preferences or undisclosed guidelines. In fact, editors likely have their own hidden motives, which are usually slanted towards maximizing the impact factor and profit of the journal. The broader editorial process wrapped around peer-review thus remains an opaque bottleneck in which any effort to reduce bias can be rendered ineffective.

- Fake publications. Apart from the largely unintentional flaws of the system, there are unfortunately also deliberate fraud schemes, which are encouraged by the same incentive system that lies at the root of many of the above problems. Large-scale production of fake publications is linked to the emergence of so-called “paper mills”, which are specialized organisations producing deceptively convincing publications of low quality or with outright fake results. The scope of the problem is hard to gauge, but according to a recent study the share of fake publications could be as high as 23% in the biomedical domain in 2020, and anywhere between 2% and 46% in different journals and areas. The strategy of these paper mills exploits the opaque bottleneck described in problem 6 by bribing editors with money and guarantees of impact factor gain to publish their papers. As more and more of these paper mills seem to pop up, it becomes important to discuss possible countermeasures. Another facilitating factor for fake or low-quality publications are “predatory journals”, which accept articles for publication without performing thorough quality checks or peer-review. Predatory journals can be notoriously hard to spot due to the lack of transparency common to practically all publication outlets.
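To make the mechanism behind problem 1 more concrete, here is a minimal simulation sketch of publication bias. Everything in it is an illustrative assumption rather than a model of any real journal: the acceptance rule (`is_published`), the 10% acceptance rate for non-significant results, the sample size and the number of studies are all hypothetical. Many studies measure an effect whose true size is zero, but significant positive results are always published while all other results are published only occasionally.

```python
import random
import statistics


def simulate_studies(n_studies, sample_size, true_effect, rng):
    """Return one effect estimate per study (mean of noisy unit-variance measurements)."""
    estimates = []
    for _ in range(n_studies):
        sample = [rng.gauss(true_effect, 1.0) for _ in range(sample_size)]
        estimates.append(statistics.fmean(sample))
    return estimates


def is_published(estimate, sample_size, rng, negative_accept_rate):
    """Hypothetical acceptance rule: significant positive results always pass,
    all other results are accepted only with probability negative_accept_rate."""
    standard_error = 1.0 / sample_size ** 0.5  # noise variance is assumed known (1.0)
    significant = estimate / standard_error > 1.96
    return significant or rng.random() < negative_accept_rate


def run(seed=0, n_studies=2000, sample_size=25, true_effect=0.0,
        negative_accept_rate=0.1):
    """Return (mean estimate over all studies, mean over published ones, number published)."""
    rng = random.Random(seed)
    estimates = simulate_studies(n_studies, sample_size, true_effect, rng)
    published = [e for e in estimates
                 if is_published(e, sample_size, rng, negative_accept_rate)]
    return statistics.fmean(estimates), statistics.fmean(published), len(published)


if __name__ == "__main__":
    all_mean, published_mean, n_published = run()
    print(f"mean effect, all studies:       {all_mean:+.3f}")
    print(f"mean effect, published studies: {published_mean:+.3f}")
    print(f"published studies:              {n_published}")
```

Under these assumptions the average over all studies stays close to the true effect of zero, while the average over the published subset is shifted upwards, because the filter over-represents lucky positive results. A reader who only sees the published record would therefore overestimate the effect.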